Oracle 11gR2 RAC Setup (on Virtual Machines)


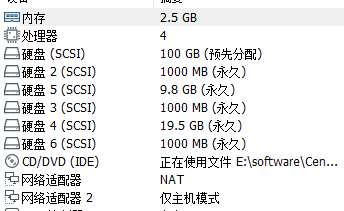

Installation environment and virtual machine planning:

Installation environment; virtual machine plan

Virtual machine OS configuration (unless noted otherwise, perform every step on both VMs)

Make the settings take effect:

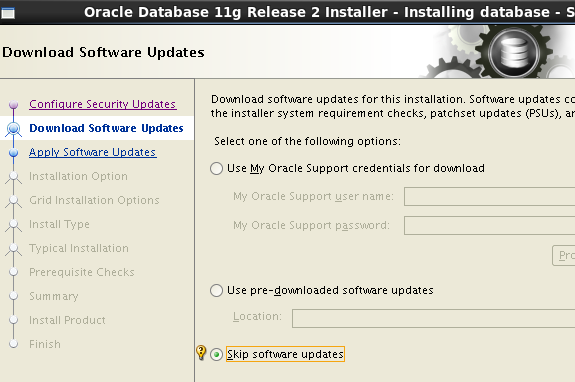

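e. Install the required packages from the installation media, configured as a local yum repository:

    # mount /dev/sr0 /mnt/cdrom/
    # vi /etc/yum.repos.d/dvd.repo
    [dvd]
    name=dvd
    baseurl=file:///mnt/cdrom
    gpgcheck=0
    enabled=1
    # yum clean all
    # yum makecache
    # yum install gcc gcc-c++ glibc glibc-devel ksh libgcc libstdc++ libstdc++-devel* make sysstat

4. Configure IPs, hosts and hostnames. The hostnames were already set to rac1 and rac2 when the OS was installed, and the IPs are assigned automatically by the VM software, so only /etc/hosts needs editing. Append the following to the end of /etc/hosts:

    # vi /etc/hosts
    192.168.149.129 rac1
    192.168.149.201 rac1-vip
    192.168.96.128  rac1-priv
    192.168.149.130 rac2
    192.168.149.100 scan-ip

5. Configure the environment variables of the grid and oracle users. Note that ORACLE_UNQNAME is the database name (orcl here). When a database is created on several nodes, one instance is created per node, and ORACLE_SID is the name of the local instance.

7. Configure SSH user equivalence for the oracle and grid users:

    # su - oracle
    $ mkdir ~/.ssh
    $ chmod 755 ~/.ssh
    $ /usr/bin/ssh-keygen -t rsa
    $ /usr/bin/ssh-keygen -t dsa

The following steps run on node 1 only. They collect all keys into one authorized_keys file (created inside ~/.ssh) and copy it to node 2:

    $ cd ~/.ssh/
    $ ssh rac1 cat ~/.ssh/id_rsa.pub >> authorized_keys
    $ ssh rac2 cat ~/.ssh/id_rsa.pub >> authorized_keys
    $ ssh rac1 cat ~/.ssh/id_dsa.pub >> authorized_keys
    $ ssh rac2 cat ~/.ssh/id_dsa.pub >> authorized_keys
    $ scp authorized_keys rac2:~/.ssh/

Then log in to node 2 and set the file's permissions there:

    [oracle@rac2 .ssh]$ chmod 600 authorized_keys

Run the following on both nodes. This is very important; if it is skipped, the installation fails later. Once the host keys have been accepted, each command should simply print the date without prompting:

    $ ssh rac1 date
    $ ssh rac2 date
    $ ssh rac1-priv date
    $ ssh rac2-priv date

Set up equivalence for the grid user the same way as for oracle.

8. Configure the raw disks:

    # vi /etc/udev/rules.d/99-oracle-asmdevices.rules
    ACTION=="add",KERNEL=="sdb1",RUN+="/bin/raw /dev/raw/raw1 %N"
    ACTION=="add",KERNEL=="sdc1",RUN+="/bin/raw /dev/raw/raw2 %N"
    ACTION=="add",KERNEL=="sdd1",RUN+="/bin/raw /dev/raw/raw3 %N"
    ACTION=="add",KERNEL=="sde1",RUN+="/bin/raw /dev/raw/raw4 %N"
    ACTION=="add",KERNEL=="sdf1",RUN+="/bin/raw /dev/raw/raw5 %N"
    KERNEL=="raw[1]",MODE="0660",OWNER="grid",GROUP="asmadmin"
    KERNEL=="raw[2]",MODE="0660",OWNER="grid",GROUP="asmadmin"
    KERNEL=="raw[3]",MODE="0660",OWNER="grid",GROUP="asmadmin"
    KERNEL=="raw[4]",MODE="0660",OWNER="grid",GROUP="asmadmin"
    KERNEL=="raw[5]",MODE="0660",OWNER="grid",GROUP="asmadmin"

(4) Start the raw devices on both nodes.

10. Install the cvuqdisk package used by the environment checks (on both nodes):

    # cd /home/grid/db/rpm
    # rpm -ivh cvuqdisk-1.0.7-1.rpm

11. Run the Cluster Verification Utility manually to verify the Oracle cluster requirements:

    # su - grid
    $ cd /home/grid/db/
    $ ./runcluvfy.sh stage -pre crsinst -n rac1,rac2 -fixup -verbose

Note: the check reported many 32-bit (i386 or i686) packages as failed. I ignored them and the system worked normally, so they can be ignored; the NTP and pdksh errors can be ignored as well. The check also reported glibc as missing on both nodes, so I had to download the package from the internet and install it again.

Install Grid. This only needs to be done on one node; the installer copies the software to the other node automatically.

    # xhost +
    # su - grid
    $ export DISPLAY=<Windows host IP>:0.0
    $ cd /home/grid/db/
    $ ./runInstaller

The graphical installation steps follow.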
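Step 5 above does not list the actual variable values, and step 8 repeats one rule per disk, so both lend themselves to a small generator. The sketch below prints per-node profile lines and the udev rules. The function names are hypothetical, and the grid ORACLE_BASE (/u01/app/grid) and the ASM instance names (+ASM1, +ASM2) are assumptions; the two homes, the database name orcl and the instance names orcl1/orcl2 come from this article. Every raw device gets the owner, group and mode used for raw1.

```shell
# Sketch only: per-node settings for the two-node RAC in this article.
# ASSUMPTIONS (not in the original): grid ORACLE_BASE=/u01/app/grid,
# ASM instance names +ASM1/+ASM2.
GRID_HOME=/u01/app/11.2.0/grid
DB_HOME=/u01/app/oracle/product/11.2.0/db_1

# oracle_profile USER NODE_NO
# Prints lines to append to USER's ~/.bash_profile on node NODE_NO (1 or 2).
oracle_profile() {
  local user=$1 node=$2
  case $user in
    grid)
      echo "export ORACLE_BASE=/u01/app/grid"   # assumption
      echo "export ORACLE_HOME=$GRID_HOME"
      echo "export ORACLE_SID=+ASM$node"        # assumption: ASM instance name
      ;;
    oracle)
      echo "export ORACLE_BASE=/u01/app/oracle"
      echo "export ORACLE_HOME=$DB_HOME"
      echo "export ORACLE_UNQNAME=orcl"         # database name
      echo "export ORACLE_SID=orcl$node"        # instance name on this node
      ;;
    *)
      echo "unknown user: $user" >&2
      return 1
      ;;
  esac
  # Single quotes: the variables are expanded at login time, not now.
  echo 'export PATH=$ORACLE_HOME/bin:$PATH'
}

# asm_raw_rules PARTITION...
# Binds the Nth partition to /dev/raw/rawN, then gives every raw device
# owner grid, group asmadmin and mode 0660.
asm_raw_rules() {
  local n=0 part i
  for part in "$@"; do
    n=$((n + 1))
    printf 'ACTION=="add",KERNEL=="%s",RUN+="/bin/raw /dev/raw/raw%d %%N"\n' "$part" "$n"
  done
  i=1
  while [ "$i" -le "$n" ]; do
    printf 'KERNEL=="raw%d",MODE="0660",OWNER="grid",GROUP="asmadmin"\n' "$i"
    i=$((i + 1))
  done
}
```

For example, as root on rac1: `oracle_profile oracle 1 >> /home/oracle/.bash_profile` and `asm_raw_rules sdb1 sdc1 sdd1 sde1 sdf1 > /etc/udev/rules.d/99-oracle-asmdevices.rules`. Reviewing the output before writing it into place is a good idea.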

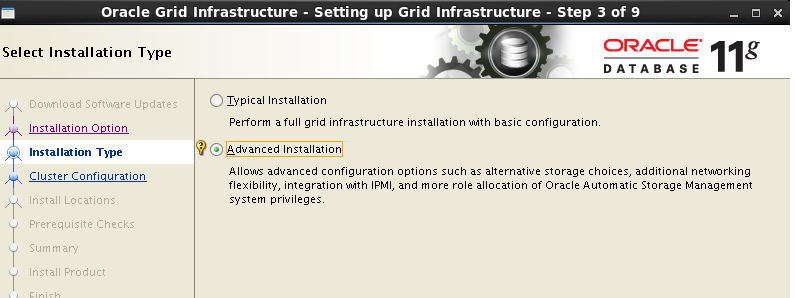

Choose the custom (Advanced) installation.

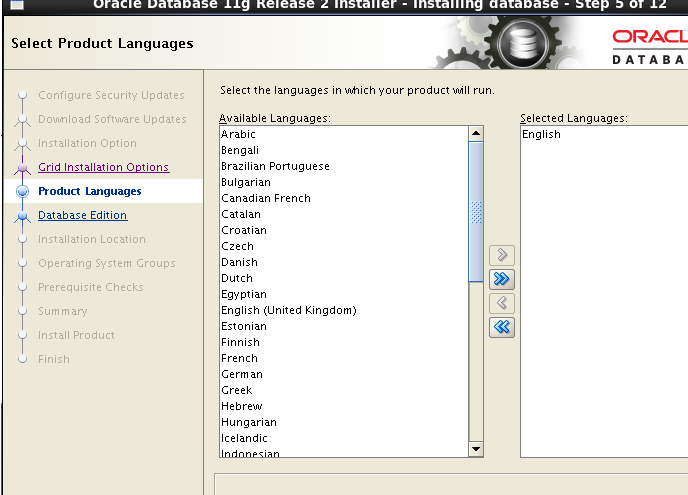

Installation language: keep the default, English.

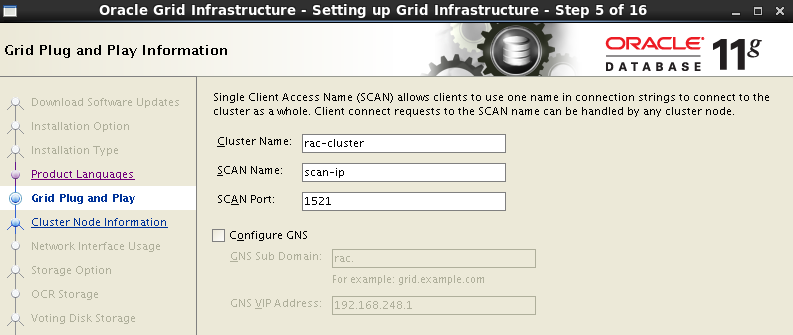

Define the cluster name. SCAN Name is the scan-ip defined in /etc/hosts; uncheck GNS.

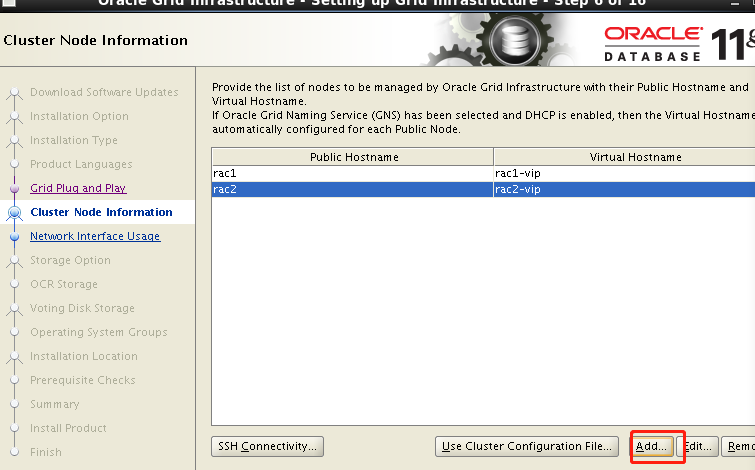

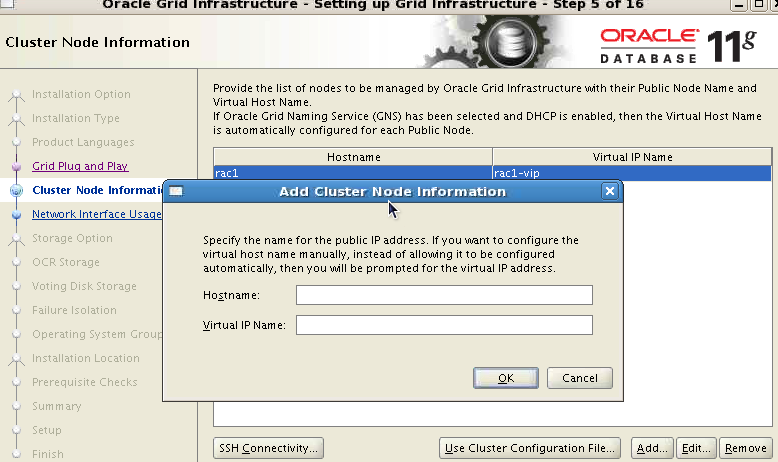

Only the first node, rac1, is listed. Click "Add" to add the second node, rac2, filling in its details following the pattern of rac1.

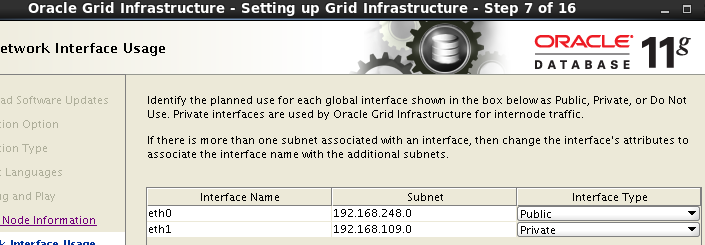

Select the network interfaces; the defaults are fine.

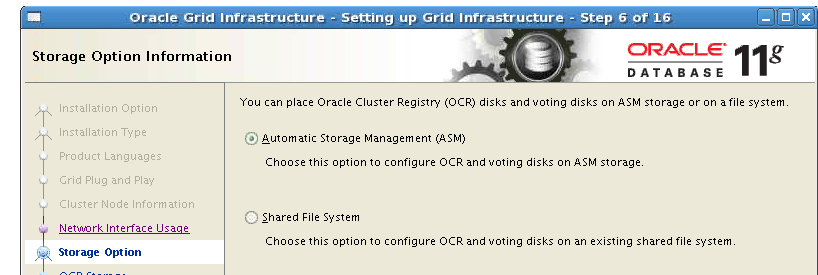

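Configure ASM. Select the raw disks configured earlier, raw1, raw2 and raw3, with External redundancy, i.e. no redundancy. Since there is no redundancy, selecting a single device also works. These devices hold the OCR (Oracle Cluster Registry) and the voting disk.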

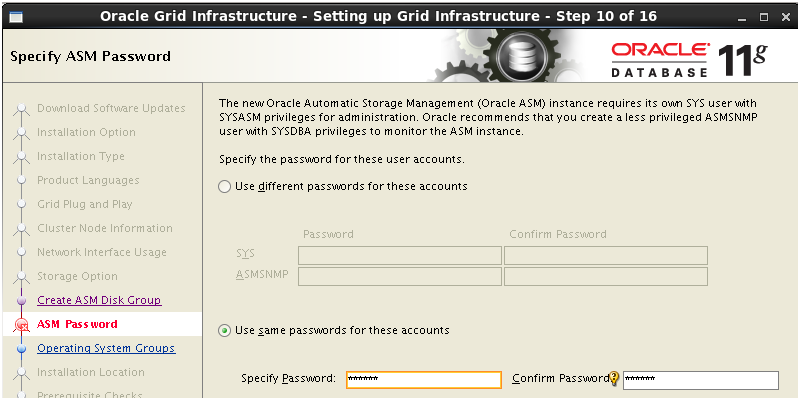

The ASM instance needs passwords for the SYS user (SYSASM privilege) and the ASMSNMP user (SYSDBA privilege). Here both use the same password; then click OK.

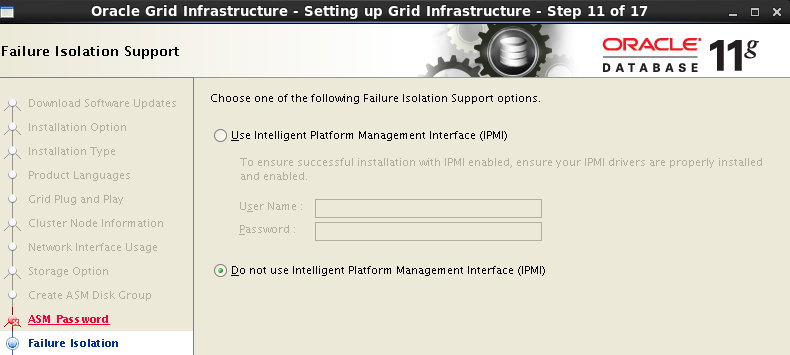

Do not use Intelligent Platform Management (IPMI).

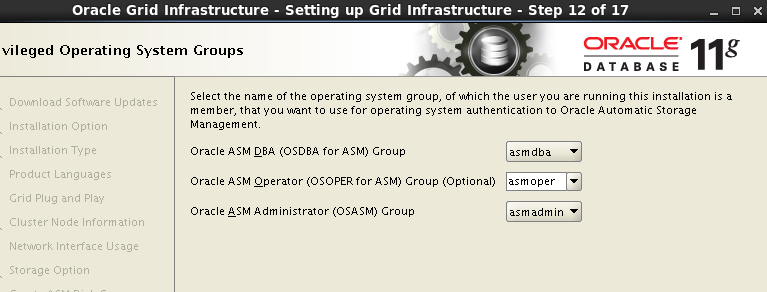

Check the operating system groups for ASM privileges.

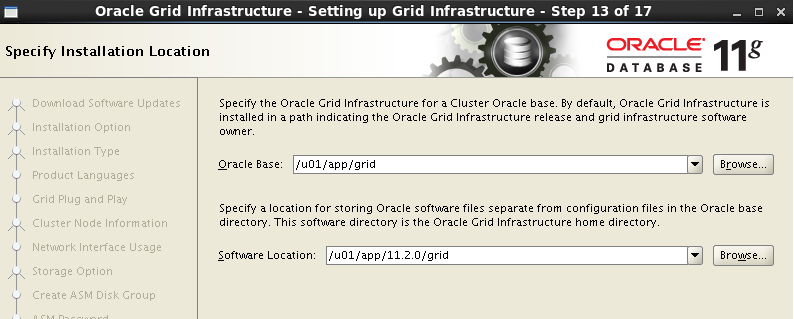

Choose the Grid software location and the Oracle base directory; the defaults are fine.

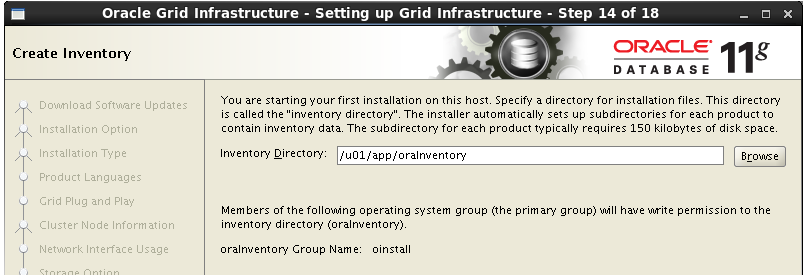

Choose the Grid inventory directory.

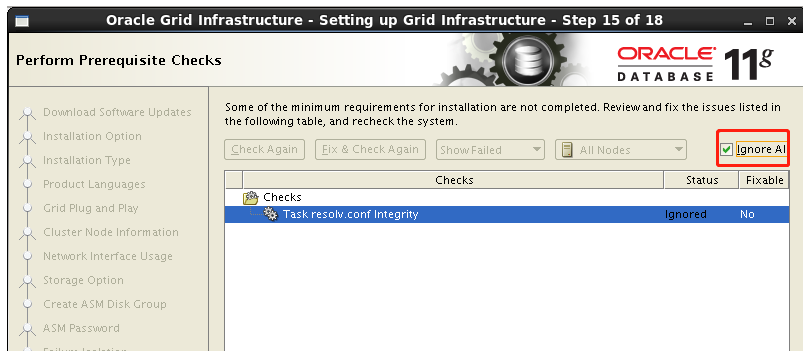

The prerequisite checks report the same errors (NTP and others) that the cluvfy script found earlier; choose Ignore All.

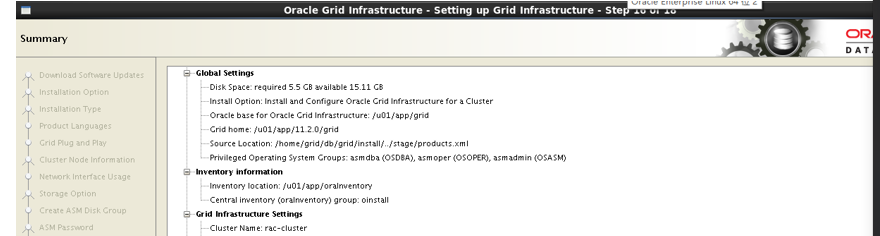

Installation summary. Click Install to start; the software is copied to rac2 automatically.

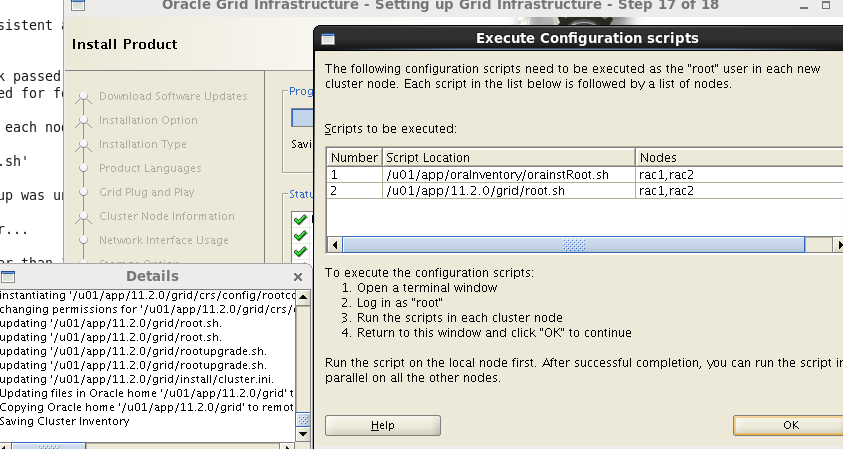

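When the Grid installation finishes, you are prompted to run orainstRoot.sh and then root.sh as root. (Both scripts must complete on rac1 before they are run on any other node.)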

rac1:

    # /u01/app/oraInventory/orainstRoot.sh
    Changing permissions of /u01/app/oraInventory.
    Adding read,write permissions for group.
    Removing read,write,execute permissions for world.
    Changing groupname of /u01/app/oraInventory to oinstall.
    The execution of the script is complete.
    # /u01/app/11.2.0/grid/root.sh
    Performing root user operation for Oracle 11g
    The following environment variables are set as:
        ORACLE_OWNER= grid
        ORACLE_HOME=  /u01/app/11.2.0/grid
    Enter the full pathname of the local bin directory: [/usr/local/bin]: (press Enter)
       Copying dbhome to /usr/local/bin ...
       Copying oraenv to /usr/local/bin ...
       Copying coraenv to /usr/local/bin ...
    Creating /etc/oratab file...
    Entries will be added to the /etc/oratab file as needed by
    Database Configuration Assistant when a database is created
    Finished running generic part of root script.
    Now product-specific root actions will be performed.
    Using configuration parameter file: /u01/app/11.2.0/grid/crs/install/crsconfig_params
    Creating trace directory
    User ignored Prerequisites during installation
    OLR initialization - successful
      root wallet
      root wallet cert
      root cert export
      peer wallet
      profile reader wallet
      pa wallet
      peer wallet keys
      pa wallet keys
      peer cert request
      pa cert request
      peer cert
      pa cert
      peer root cert TP
      profile reader root cert TP
      pa root cert TP
      peer pa cert TP
      pa peer cert TP
      profile reader pa cert TP
      profile reader peer cert TP
      peer user cert
      pa user cert
    Adding Clusterware entries to upstart
    CRS-2672: Attempting to start 'ora.mdnsd' on 'rac1'
    CRS-2676: Start of 'ora.mdnsd' on 'rac1' succeeded
    CRS-2672: Attempting to start 'ora.gpnpd' on 'rac1'
    CRS-2676: Start of 'ora.gpnpd' on 'rac1' succeeded
    CRS-2672: Attempting to start 'ora.cssdmonitor' on 'rac1'
    CRS-2672: Attempting to start 'ora.gipcd' on 'rac1'
    CRS-2676: Start of 'ora.cssdmonitor' on 'rac1' succeeded
    CRS-2676: Start of 'ora.gipcd' on 'rac1' succeeded
    CRS-2672: Attempting to start 'ora.cssd' on 'rac1'
    CRS-2672: Attempting to start 'ora.diskmon' on 'rac1'
    CRS-2676: Start of 'ora.diskmon' on 'rac1' succeeded
    CRS-2676: Start of 'ora.cssd' on 'rac1' succeeded
    ASM created and started successfully.
    Disk Group OCR created successfully.
    clscfg: -install mode specified
    Successfully accumulated necessary OCR keys.
    Creating OCR keys for user 'root', privgrp 'root'..
    Operation successful.
    CRS-4256: Updating the profile
    Successful addition of voting disk 496abcfc4e214fc9bf85cf755e0cc8e2.
    Successfully replaced voting disk group with +OCR.
    CRS-4256: Updating the profile
    CRS-4266: Voting file(s) successfully replaced
    STATE    File Universal Id    File Name    Disk group

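Run the two scripts on rac2 the same way. When they finish, click OK in the dialog shown above to continue, then ignore the reported errors to complete the installation. Then check the result:

    # su - grid
    $ crsctl check crs
    CRS-4638: Oracle High Availability Services is online
    Name    Type    R/RA    F/FT    Target    State    Host
    $ olsnodes -n
    rac1 1
    rac2 2

Check the Oracle TNS listener processes on both nodes:

    $ ps -ef | grep lsnr | grep -v 'grep' | grep -v 'ocfs' | awk '{print $9}'
    LISTENER_SCAN1
    LISTENER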
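The crsctl check above is worth repeating on both nodes after any restart. A minimal sketch of a helper (the name crs_all_online is hypothetical) that turns the CRS-nnnn status lines into an exit code; it assumes the message format shown in this article, where a healthy line ends in "is online".

```shell
# crs_all_online
# Reads `crsctl check crs` or `crsctl check cluster` output on stdin.
# Succeeds only if at least one CRS-nnnn status line is present and every
# such line ends in "is online"; a line such as CRS-4639 ("Could not
# contact ...") makes it fail.
crs_all_online() {
  awk '
    /^CRS-[0-9]+:/ {
      seen++
      if ($0 !~ /is online[ \t\r]*$/) bad++
    }
    END { exit !(seen > 0 && bad == 0) }
  '
}
```

For example, as grid on rac1 (relying on the SSH equivalence set up earlier): `for n in rac1 rac2; do ssh $n /u01/app/11.2.0/grid/bin/crsctl check crs | crs_all_online && echo "$n: online"; done`.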

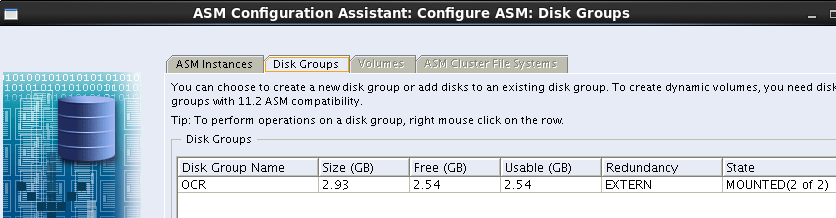

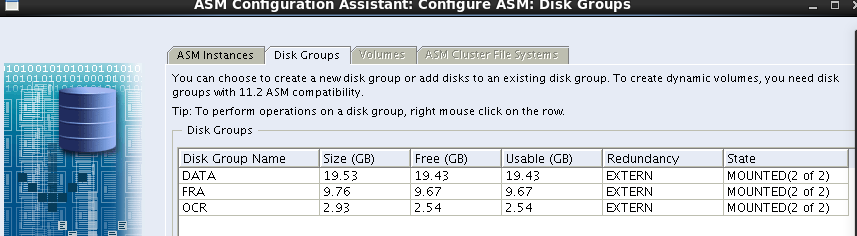

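Confirm that Oracle ASM is running for the Oracle Clusterware files:

    $ srvctl status asm -a
    ASM is running on rac2,rac1
    ASM is enabled.

3. Create the ASM disk groups for data and the fast recovery area (on rac1 only):

    # su - grid
    $ asmca

The OCR disk group configured during the Grid installation is already present.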

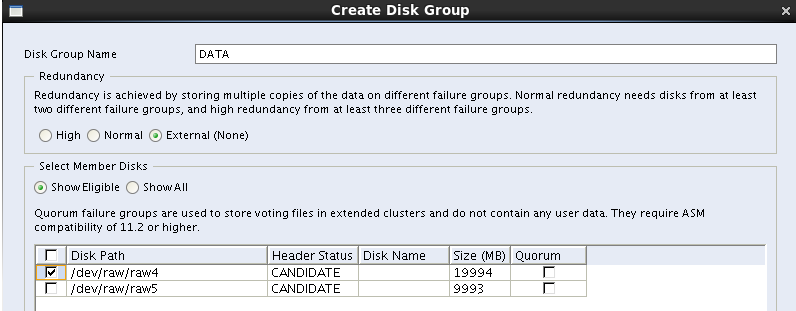

Click Create and add the DATA disk group, using the raw disk raw4.

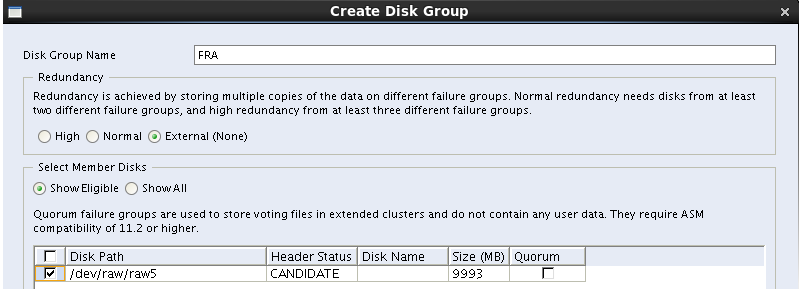

Create the FRA disk group the same way, using the raw disk raw5.
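This article creates the disk groups in asmca. For reference, a disk group with the same settings (external redundancy, one raw disk) can also be created from SQL*Plus connected as SYSASM. The sketch below only builds the statement; the function name asm_dg_sql is hypothetical.

```shell
# asm_dg_sql NAME DISK...
# Prints a CREATE DISKGROUP statement with external redundancy (no ASM
# mirroring), the same choice made in asmca above.
asm_dg_sql() {
  if [ "$#" -lt 2 ]; then
    echo "usage: asm_dg_sql NAME DISK..." >&2
    return 1
  fi
  local name=$1 disks="" d
  shift
  for d in "$@"; do
    # Quote each disk path and separate entries with ", ".
    disks="${disks:+$disks, }'$d'"
  done
  printf 'CREATE DISKGROUP %s EXTERNAL REDUNDANCY DISK %s;\n' "$name" "$disks"
}
```

For example, as grid on rac1: `asm_dg_sql DATA /dev/raw/raw4 | sqlplus -S / as sysasm`. A disk group created this way is mounted only on the local ASM instance, so it also has to be mounted on rac2 (for example with `srvctl start diskgroup -g DATA -n rac2`); asmca handles that itself.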

The resulting ASM disk groups:

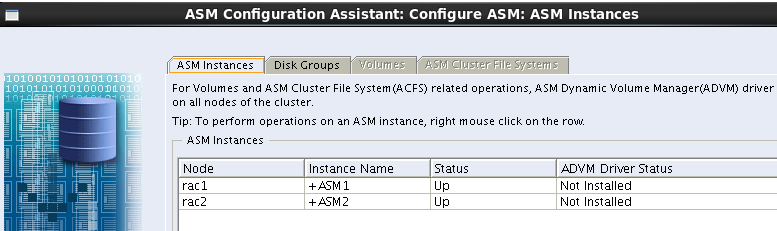

The ASM instances:

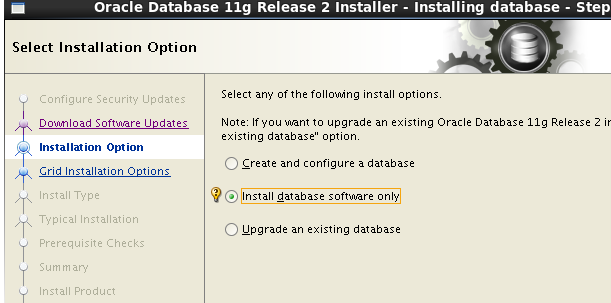

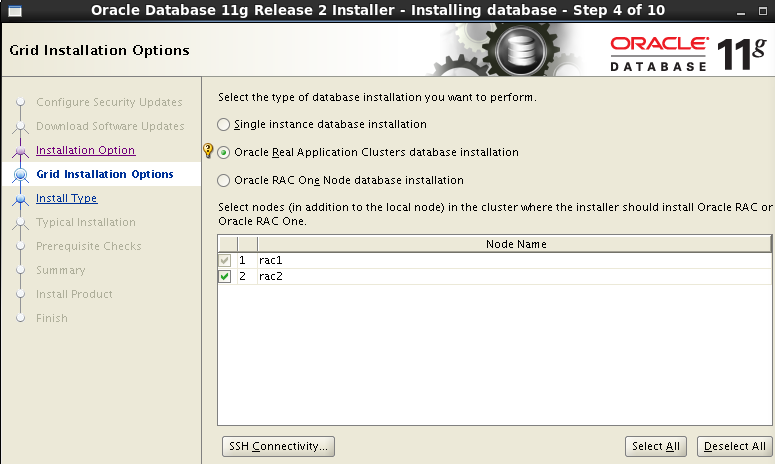

Install the Oracle Database software (RAC)

Select "Oracle Real Application Clusters database installation" (the default) and click Select All to make sure every node is selected.

Keep the default language, English.

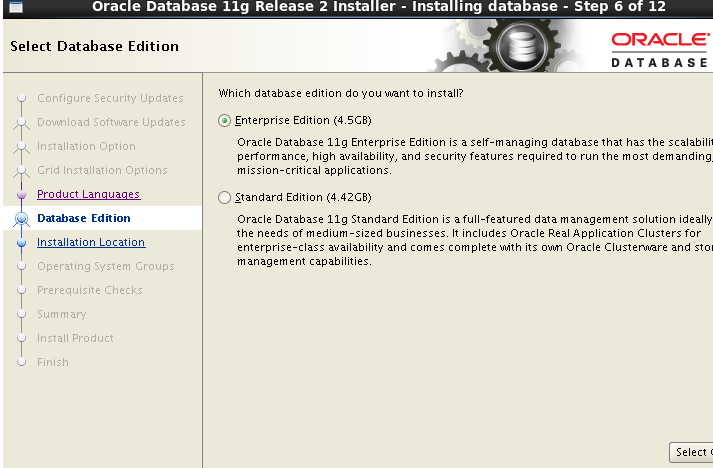

Select Enterprise Edition.

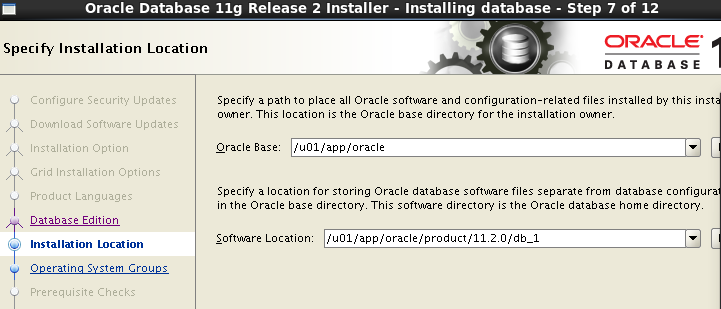

Choose the Oracle software location. ORACLE_BASE and ORACLE_HOME use the values configured earlier, so the defaults are fine.

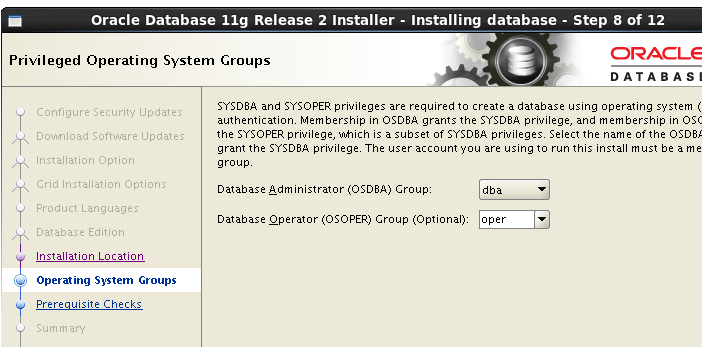

Operating system groups for Oracle privileges: keep the defaults.

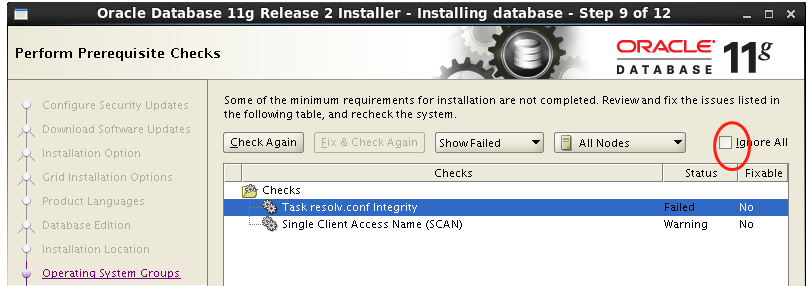

Prerequisite checks: ignore all failed checks.

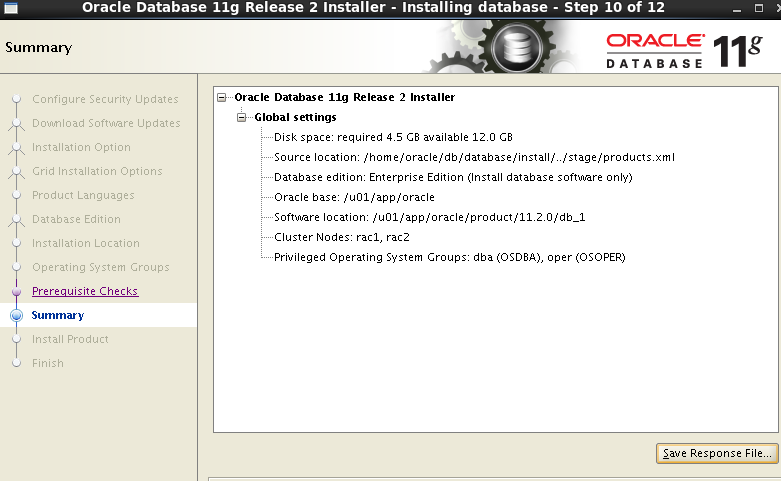

The RAC installation summary.

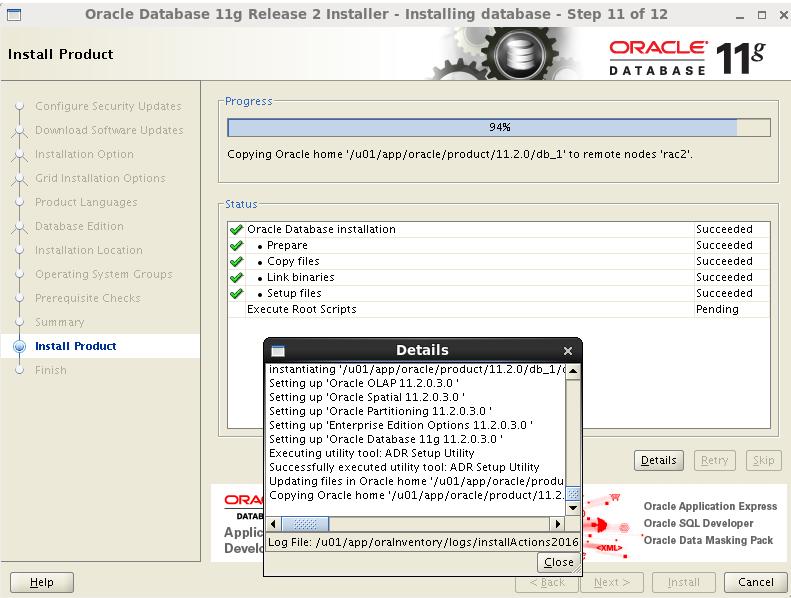

Start the installation; the software is copied to the other node automatically.

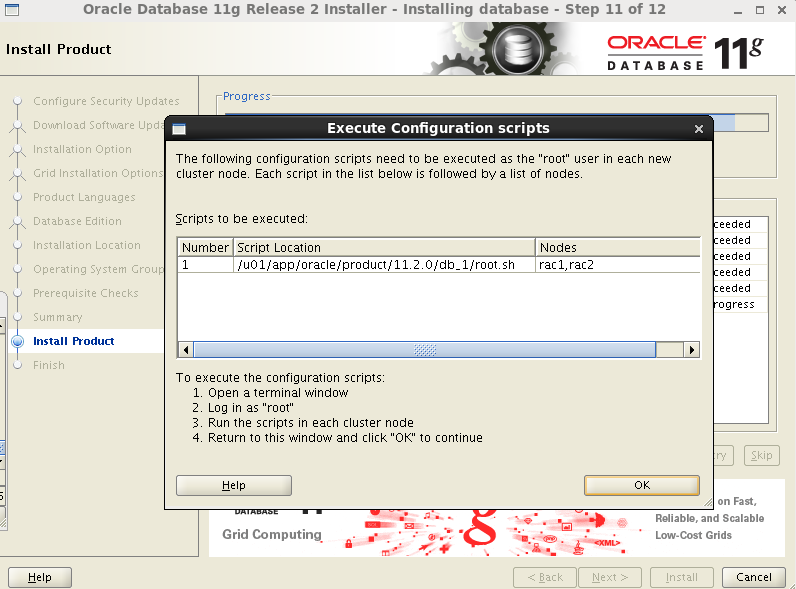
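If linking emdctl fails during this step (the first error below), the fix is to append -lnnz11 to the emdctl link command in ins_emagent.mk. A sketch that makes that edit with awk instead of an editor, assuming, as in the excerpt below, that the "$(SYSMANBIN)emdctl:" line is immediately followed by its "$(MK_EMAGENT_NMECTL)" line. The function name is hypothetical.

```shell
# patch_emagent_mk FILE
# Appends " -lnnz11" to the $(MK_EMAGENT_NMECTL) line that directly follows
# "$(SYSMANBIN)emdctl:". Saves the previous version as FILE.orig and does
# nothing to a line that already contains -lnnz11.
patch_emagent_mk() {
  local mk=$1
  [ -f "$mk" ] || { echo "not found: $mk" >&2; return 1; }
  cp -p "$mk" "$mk.orig" || return 1
  awk '
    index(prev, "$(SYSMANBIN)emdctl:") == 1 &&
    index($0, "$(MK_EMAGENT_NMECTL)") > 0 &&
    index($0, "-lnnz11") == 0 { $0 = $0 " -lnnz11" }
    { print; prev = $0 }
  ' "$mk.orig" > "$mk"
}
```

Usage, as oracle: `patch_emagent_mk $ORACLE_HOME/sysman/lib/ins_emagent.mk`, then click Retry in the installer. String matching with index() is used instead of regular expressions so the $( and ) characters need no escaping.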

Errors during the installation and their fixes:

    /u01/app/oracle/product/11.2.0/dbhome_1/sysman/lib//libnmectl.a(nmectlt.o): In function `nmectlt_genSudoProps':
    nmectlt.c:(.text+0x76): undefined reference to `B_DestroyKeyObject'
    nmectlt.c:(.text+0x7f): undefined reference to `B_DestroyKeyObject'
    nmectlt.c:(.text+0x88): undefined reference to `B_DestroyKeyObject'
    nmectlt.c:(.text+0x91): undefined reference to `B_DestroyKeyObject'
    INFO: collect2: error: ld returned 1 exit status
    INFO: /u01/app/oracle/product/11.2.0/dbhome_1/sysman/lib/ins_emagent.mk:176: recipe for target '/u01/app/oracle/product/11.2.0/dbhome_1/sysman/lib/emdctl' failed
    make[1]: Leaving directory '/u01/app/oracle/product/11.2.0/dbhome_1/sysman/lib'
    INFO: /u01/app/oracle/product/11.2.0/dbhome_1/sysman/lib/ins_emagent.mk:52: recipe for target 'emdctl' failed
    INFO: make[1]: [/u01/app/oracle/product/11.2.0/dbhome_1/sysman/lib/emdctl] Error 1
    INFO: make: [emdctl] Error 2
    INFO: End output from spawned process.
    INFO: ----------------------------------
    INFO: Exception thrown from action: make
    Exception Name: MakefileException
    Exception String: Error in invoking target 'agent nmhs' of makefile '/u01/app/oracle/product/11.2.0/dbhome_1/sysman/lib/ins_emagent.mk'. See '/u01/app/oraInventory/logs/installActions2017-05-02_12-37-15PM.log' for details.
    Exception Severity: 1

Fix: change the link options of emdctl. Edit /u01/app/oracle/product/11.2.0/dbhome_1/sysman/lib/ins_emagent.mk and change lines 171-176 from

    #===========================
    #  emdctl
    #===========================

    $(SYSMANBIN)emdctl:
            $(MK_EMAGENT_NMECTL)

to

    #===========================
    #  emdctl
    #===========================

    $(SYSMANBIN)emdctl:
            $(MK_EMAGENT_NMECTL) -lnnz11

then click Retry.

A second possible error:

    INFO: Exception thrown from action: make
    Exception Name: MakefileException
    Exception String: Error in invoking target 'irman ioracle' of makefile '/u01/app/oracle/product/11.2.0/db_1/rdbms/lib/ins_rdbms.mk'. See '/u01/app/oraInventory/logs/installActions2019-04-30_03-12-13PM.log' for details.
Fix:

    $ cd $ORACLE_HOME/rdbms/admin
    $ /usr/bin/make -f $ORACLE_HOME/rdbms/lib/ins_rdbms.mk irman

then click Retry. After the installation, run the root script as root on each node:

    # /u01/app/oracle/product/11.2.0/db_1/root.sh
    Performing root user operation for Oracle 11g
    The following environment variables are set as:
        ORACLE_OWNER= oracle
        ORACLE_HOME=  /u01/app/oracle/product/11.2.0/db_1
    Enter the full pathname of the local bin directory: [/usr/local/bin]: (press Enter)
    The contents of "dbhome" have not changed. No need to overwrite.
    The contents of "oraenv" have not changed. No need to overwrite.
    The contents of "coraenv" have not changed. No need to overwrite.
    Entries will be added to the /etc/oratab file as needed by
    Database Configuration Assistant when a database is created
    Finished running generic part of root script.
    Now product-specific root actions will be performed.
    Finished product-specific root actions.

If root.sh fails with the following errors:

    CRS-4124: Oracle High Availability Services startup failed.
    CRS-4000: Command Start failed, or completed with errors.
    ohasd failed to start: Inappropriate ioctl for device
    ohasd failed to start at /u01/app/11.2.0/grid/crs/install/rootcrs.pl line 443.

this is a well-known bug in 11.2.0.1, and the workaround is simple. Open two windows and run root.sh in one. When root.sh prints "Adding daemon to inittab", run the following command repeatedly in the other window until it no longer reports "/bin/dd: opening '/var/tmp/.oracle/npohasd': No such file or directory":

    # /bin/dd if=/var/tmp/.oracle/npohasd of=/dev/null bs=1024 count=1

Before rerunning root.sh, remember to remove the failed configuration:

    # /u01/app/11.2.0/grid/crs/install/rootcrs.pl -deconfig -force -verbose

Reference: http://www.voidcn.com/article/p-hegzylwu-bmn.html

This completes the installation of the database software.
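Instead of retyping the dd command by hand in the second window, a loop like the following can be started there as root once root.sh reaches "Adding daemon to inittab". This is a sketch: the function name, the one-second interval and the default of 600 attempts are arbitrary choices, not part of the original workaround.

```shell
# read_npohasd [PIPE] [MAX_TRIES]
# Workaround loop for the 11.2.0.1 ohasd startup bug: keep trying to read one
# block from the ohasd pipe until it exists and the read succeeds.
# Gives up (returns 1) after MAX_TRIES attempts, one second apart.
read_npohasd() {
  local pipe=${1:-/var/tmp/.oracle/npohasd} max=${2:-600} i=0
  while [ "$i" -lt "$max" ]; do
    if [ -e "$pipe" ] && dd if="$pipe" of=/dev/null bs=1024 count=1 2>/dev/null; then
      return 0
    fi
    i=$((i + 1))
    sleep 1
  done
  return 1
}
```

Usage: `read_npohasd && echo "npohasd read"`. Checking that the pipe exists before calling dd avoids the repeated "No such file or directory" messages.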

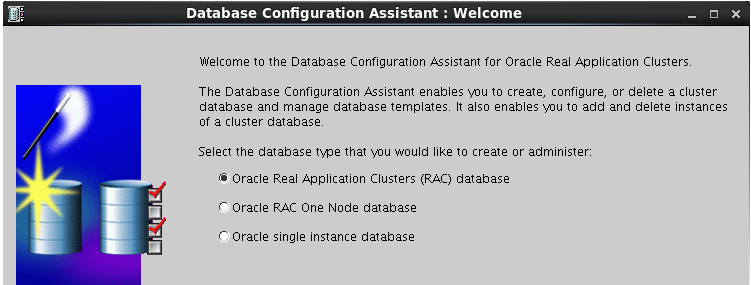

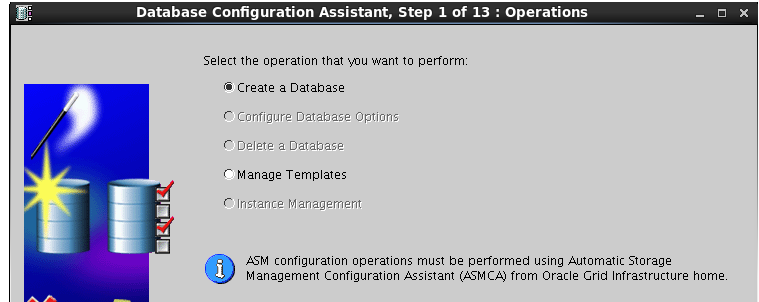

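If DBCA does not offer the RAC database option here, the clusterware is probably not running. Check the cluster resources as grid:

    $ crsctl check crs
    CRS-4639: Could not contact Oracle High Availability Services
    $ crs_stat -t -v
    CRS-0184: Cannot communicate with the CRS daemon.

The stack is not up, so start it manually as root (in the background, because "init.ohasd run" does not return):

    # /etc/init.d/init.ohasd run >/dev/null 2>&1 </dev/null &

Then select Create a Database.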

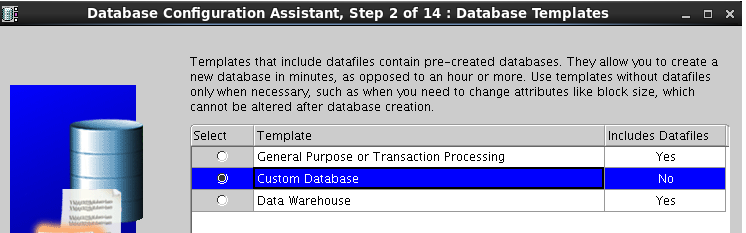

Select Custom Database (General Purpose also works).

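For the configuration type choose Admin-Managed, enter orcl as the global database name and orcl as the SID prefix for the instances on each node, and select both nodes.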

Keep the defaults: configure Enterprise Manager and enable the automatic maintenance tasks.

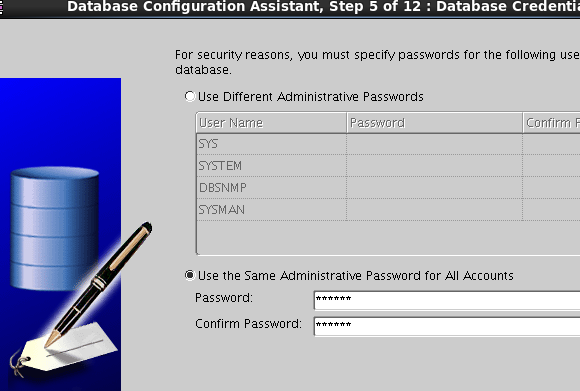

Set the same password, oracle, for the SYS, SYSTEM, DBSNMP and SYSMAN users.

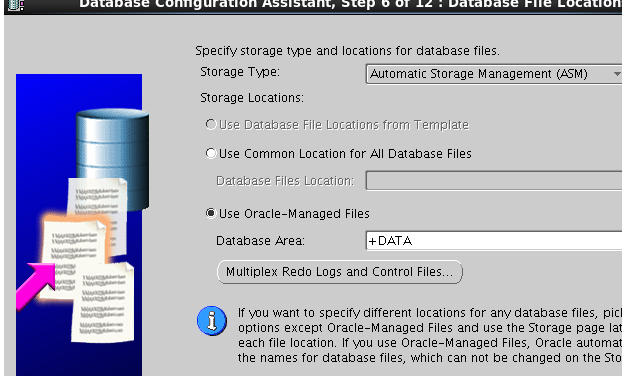

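Use ASM storage with OMF (Oracle Managed Files), and select the DATA disk group created earlier for the database area.

a. No disk groups may be listed here. For a fix, see https://www.cnblogs.com/cqubityj/p/6828946.html; in this case I set the permissions of the oracle executable in the grid home to 6751.

b. The disk group may also be dismounted. Fix: in asmcmd, lsdg shows which disk groups are currently mounted, and mount DATA mounts the disk group named DATA.

    $ asmcmd
    ASMCMD> lsdg
    ASMCMD> mount DATA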
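The mount check in (b) can be scripted. A minimal sketch, with a hypothetical function name, that reads `asmcmd lsdg` output and succeeds if the named disk group is mounted; it assumes the 11.2 lsdg layout, where State is the first column and the disk group name, with a trailing "/", is the last.

```shell
# dg_mounted NAME
# Reads `asmcmd lsdg` output on stdin (header line first) and succeeds if
# disk group NAME is listed with State MOUNTED.
dg_mounted() {
  awk -v dg="$1/" '
    NR > 1 && $1 == "MOUNTED" && $NF == dg { found = 1 }
    END { exit !found }
  '
}
```

For example, as grid: `asmcmd lsdg | dg_mounted DATA || asmcmd mount DATA`.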

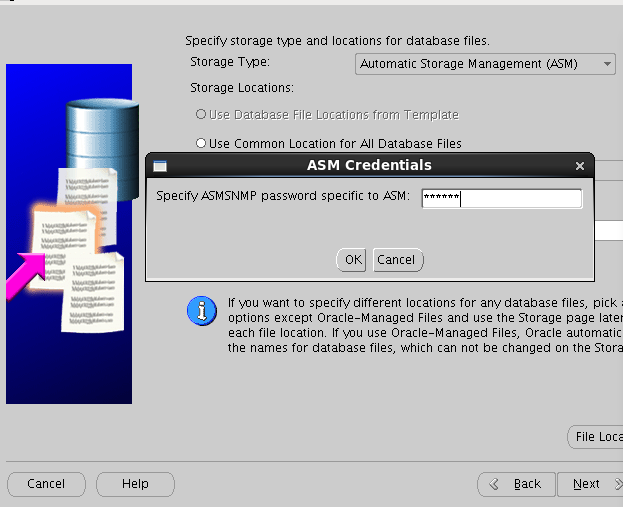

Set the ASM password to oracle_4U. This may fail with "ORA-01031: insufficient privileges". In that case delete /u01/app/11.2.0/grid/dbs/orapw+ASM, create a new password file (its password is then oracle_4U), copy it from the dbs directory to the other node, and create the ASMSNMP user:

    $ orapwd file=/u01/app/11.2.0/grid/dbs/orapw+ASM password=oracle_4U
    $ cd /u01/app/11.2.0/grid/dbs
    $ scp orapw+ASM rac2:/u01/app/11.2.0/grid/dbs/
    $ sqlplus / as sysasm
    SQL> create user asmsnmp identified by oracle_4U;
    SQL> grant sysdba to asmsnmp;

Then click Retry.

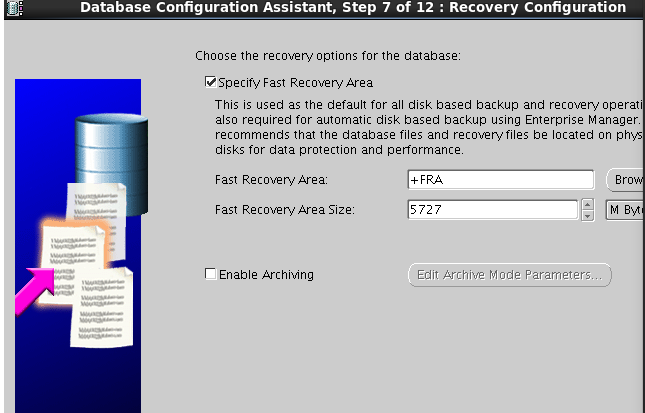

Specify the fast recovery area using the FRA disk group created earlier, and leave archiving disabled.

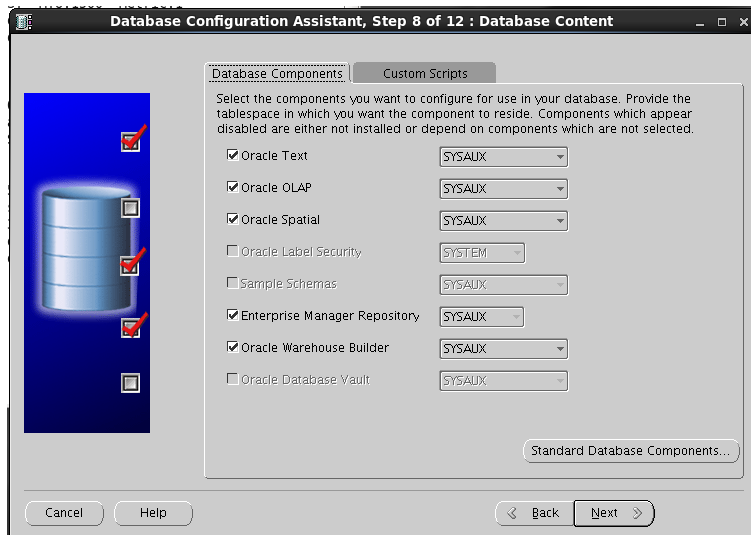

Database components: keep the defaults.

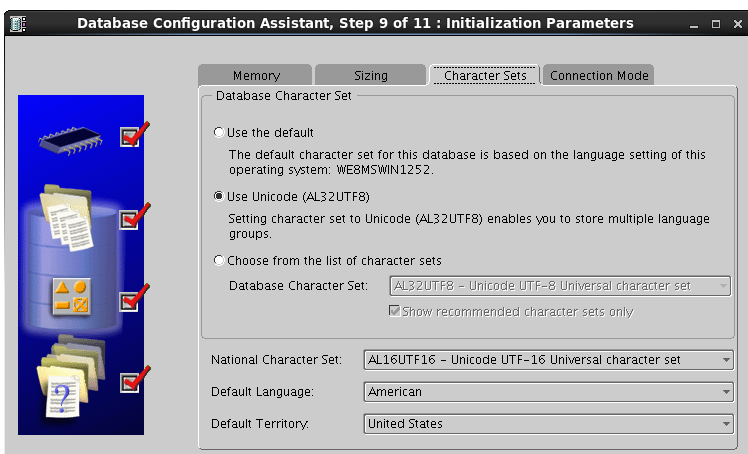

Select the AL32UTF8 character set.

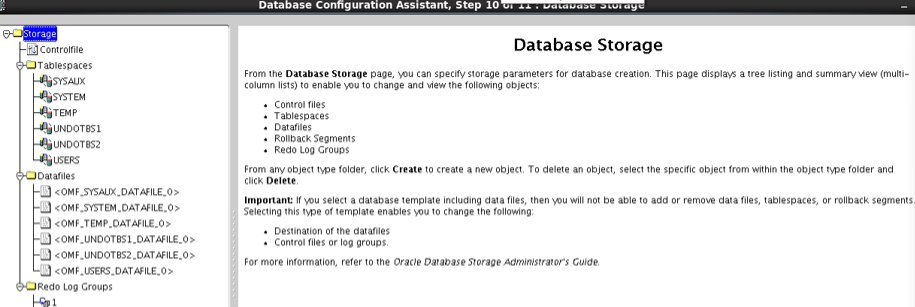

Keep the default database storage settings.

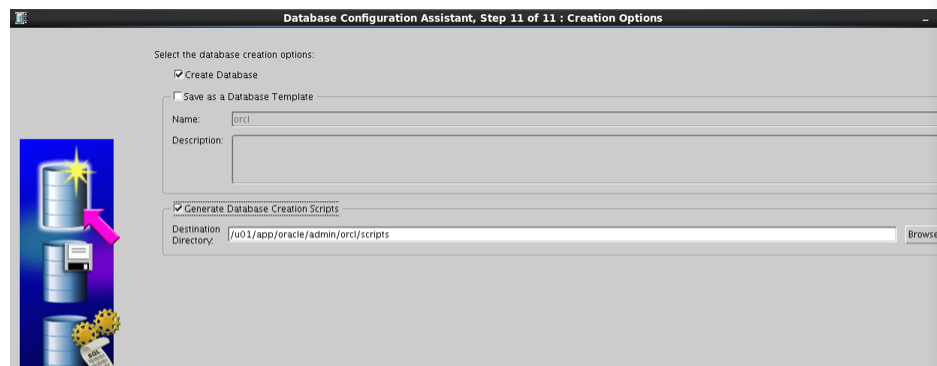

Start creating the database, and also check the option to generate the database creation scripts.

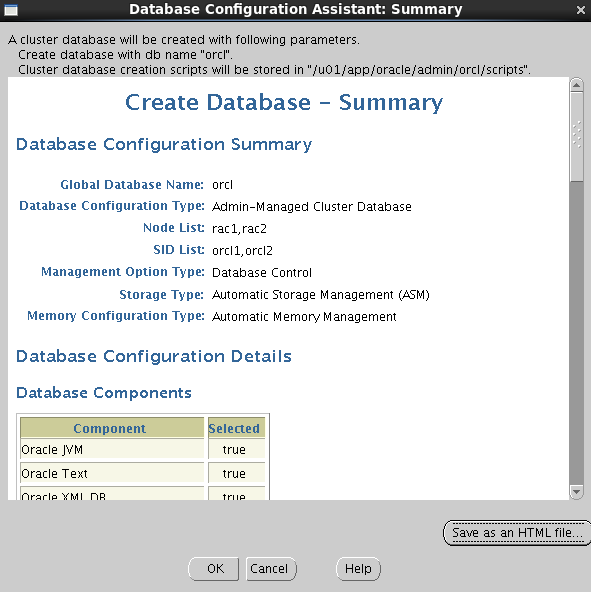

Database summary.

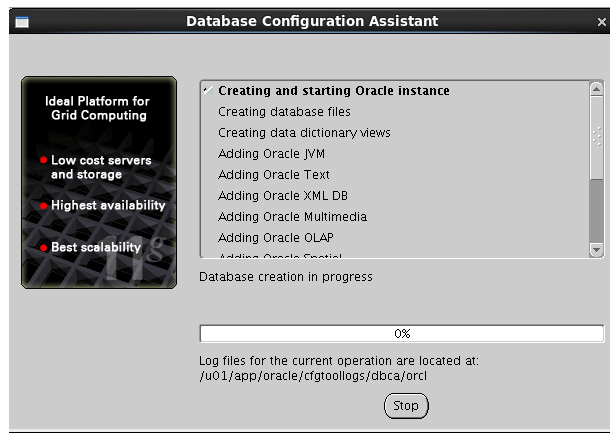

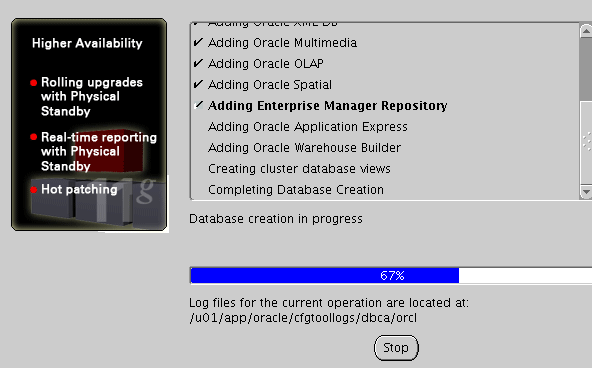

Start the installation.

A possible problem during installation is "ORA-12547: TNS:lost contact". Check whether $ORACLE_HOME/bin/oracle and $ORACLE_HOME/rdbms/lib/config.o have a size of 0. If they do, relink the Oracle software as oracle:

    $ relink all
    writing relink log to: /u01/app/oracle/product/11.2.0/db_1/install/relink.log

This completes the creation of the database instances.
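The zero-size check for ORA-12547 above can be wrapped in a small test before deciding to relink. A minimal sketch with a hypothetical function name; it also treats a missing file as needing a relink, which is an assumption beyond the original advice.

```shell
# needs_relink [ORACLE_HOME]
# Succeeds (exit 0) and names the file if $ORACLE_HOME/bin/oracle or
# $ORACLE_HOME/rdbms/lib/config.o is missing or zero bytes.
# Returns 1 if both are non-empty, 2 if no home is given or set.
needs_relink() {
  local home=${1:-$ORACLE_HOME} f
  [ -n "$home" ] || { echo "ORACLE_HOME not set" >&2; return 2; }
  for f in "$home/bin/oracle" "$home/rdbms/lib/config.o"; do
    # -s is true only for an existing file with size > 0.
    if [ ! -s "$f" ]; then
      echo "empty or missing: $f"
      return 0
    fi
  done
  return 1
}
```

Usage, as oracle: `needs_relink && relink all`.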

RAC maintenance

2. Check the cluster database status:

    $ srvctl status database -d orcl
    Instance orcl1 is running on node rac1
    Instance orcl2 is running on node rac2

3. Check the CRS status:

    $ crsctl check crs
    CRS-4638: Oracle High Availability Services is online
    CRS-4537: Cluster Ready Services is online
    CRS-4529: Cluster Synchronization Services is online
    CRS-4533: Event Manager is online

and the cluster:

    $ crsctl check cluster
    CRS-4537: Cluster Ready Services is online
    CRS-4529: Cluster Synchronization Services is online
    CRS-4533: Event Manager is online

4. Show the node configuration of the cluster:

    $ olsnodes
    rac1
    rac2
    $ olsnodes -n
    rac1 1
    rac2 2
    $ olsnodes -n -i -s -t
    rac1 1 rac1-vip Active Unpinned
    rac2 2 rac2-vip Active Unpinned

5. Show the clusterware voting disk:

    $ crsctl query css votedisk
    STATE    File Universal Id                   File Name         Disk group
    1. ONLINE   496abcfc4e214fc9bf85cf755e0cc8e2 (/dev/raw/raw1) [OCR]
    Located 1 voting disk(s).

6. Show the SCAN VIP configuration (sample output for reference):

    $ srvctl config scan
    SCAN name: scan-ip, Network: 1/192.168.248.0/255.255.255.0/eth0
    SCAN VIP name: scan1, IP: /scan-ip/192.168.248.110
    $ srvctl config scan_listener
    SCAN Listener LISTENER_SCAN1 exists. Port: TCP:1521

7. Stop and start the cluster database:

    $ srvctl stop database -d orcl
    $ srvctl status database -d orcl
    $ srvctl start database -d orcl

To shut down all nodes, run the following as root. Note that it actually stops only the current node:

    # /u01/app/11.2.0/grid/bin/crsctl stop crs

Enterprise Manager (run as oracle on either node; browse to https://<node IP>:1158/em and log in with one of the accounts whose passwords were set above, such as SYSTEM):

    $ emctl status dbconsole
    $ emctl start dbconsole
    $ emctl stop dbconsole

Connecting locally with SQL*Plus, where HOST in the connect descriptor is scan-ip:

    sqlplus sys/<password>@<alias>_ORCL as sysdba
    SQL*Plus: Release 11.2.0.1.0 Production on Thu Apr 14 14:37:30 2016
    Copyright (c) 1982, 2010, Oracle. All rights reserved.
    Connected to:
    INSTANCE_NAME    STATUS
    orcl1            OPEN

Connecting again from a second terminal window gives instance orcl2, which shows that connecting through the SCAN IP balances connections across the instances.

If the connection fails with ORA-12545, check local_listener:

    NAME             TYPE      VALUE
    local_listener   string    (ADDRESS=(PROTOCOL=TCP)(HOST=scan-vip)(PORT=1521))

and change the host in local_listener to the node's VIP address (orcl2 is changed the same way, using rac2's VIP):

    SQL> alter system set local_listener='(ADDRESS=(PROTOCOL=TCP)(HOST=192.168.149.201)(PORT=1521))' sid='orcl1';
    System altered.
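Because the local_listener fix above is repeated once per instance, a small helper that prints the statement for a given instance and VIP avoids typos in the nested parentheses. A minimal sketch; the function name is hypothetical and the port defaults to 1521 as in this article.

```shell
# local_listener_sql SID VIP [PORT]
# Prints the ALTER SYSTEM statement that points one instance's local_listener
# at its node VIP (the ORA-12545 fix described above).
local_listener_sql() {
  if [ "$#" -lt 2 ]; then
    echo "usage: local_listener_sql SID VIP [PORT]" >&2
    return 1
  fi
  local sid=$1 vip=$2 port=${3:-1521}
  printf "alter system set local_listener='(ADDRESS=(PROTOCOL=TCP)(HOST=%s)(PORT=%s))' sid='%s';\n" \
    "$vip" "$port" "$sid"
}
```

For example: `local_listener_sql orcl1 192.168.149.201 | sqlplus -S / as sysdba`, then the same for orcl2 with rac2's VIP address.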
Failover drill (simulating single-node operation)

(Editor: 李大同)